A new study has revealed that large language models (LLMs) display noticeably different cultural tendencies depending on the language in which they’re prompted — particularly between English and Chinese. The findings raise important questions about AI neutrality, cultural representation, and the localization of global AI systems.

Cultural Shifts in AI Responses

The research, conducted by a team of computer scientists and linguists, tested LLMs such as GPT on the same set of questions, posed first in English and then in Chinese translation. The responses varied significantly in tone, opinion, and framing, even when the prompts were semantically identical.

For example:

- In English, the model tended to emphasize individualism, personal freedom, and critical thinking.

- In Chinese, responses leaned more toward collectivism, social harmony, and respect for authority or tradition.

These differences reflect underlying cultural norms embedded in the datasets the models were trained on, suggesting that LLMs are not entirely “neutral” but instead reflect the dominant values of the language and sources they ingest.
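The paired-prompt comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the study's actual method: the article does not say how responses were scored, so the keyword lexicons in `MARKERS`, the `fake_model` stand-in, and the function names are all hypothetical. A real evaluation would more likely rely on validated survey instruments or human raters than on keyword counts.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

# Hypothetical marker lexicons (assumption, not from the study).
MARKERS: Dict[str, List[str]] = {
    "individualist": ["individual", "freedom", "independent", "个人", "自由", "独立"],
    "collectivist": ["harmony", "community", "tradition", "和谐", "集体", "传统"],
}


def score_response(text: str) -> Counter:
    """Count occurrences of each category's marker terms in the text."""
    lowered = text.lower()
    return Counter(
        {label: sum(lowered.count(term) for term in terms)
         for label, terms in MARKERS.items()}
    )


def compare_languages(
    prompt_pairs: List[Tuple[str, str]],
    ask_model: Callable[[str], str],
) -> Dict[str, Counter]:
    """Ask each (English, Chinese) prompt pair and tally markers per language."""
    totals: Dict[str, Counter] = {"en": Counter(), "zh": Counter()}
    for english_prompt, chinese_prompt in prompt_pairs:
        totals["en"].update(score_response(ask_model(english_prompt)))
        totals["zh"].update(score_response(ask_model(chinese_prompt)))
    return totals


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; returns canned text for the demo prompts."""
    canned = {
        "What makes a good life?": "Personal freedom and an independent mind.",
        "什么是美好的生活？": "家庭和谐，尊重传统。",
    }
    return canned[prompt]


if __name__ == "__main__":
    pairs = [("What makes a good life?", "什么是美好的生活？")]
    for language, counts in compare_languages(pairs, fake_model).items():
        print(language, dict(counts))
```

Passing the model in as a plain callable keeps the harness independent of any particular provider, so the same prompt pairs can be replayed against different systems and the per-language tallies compared side by side.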

Why It Matters

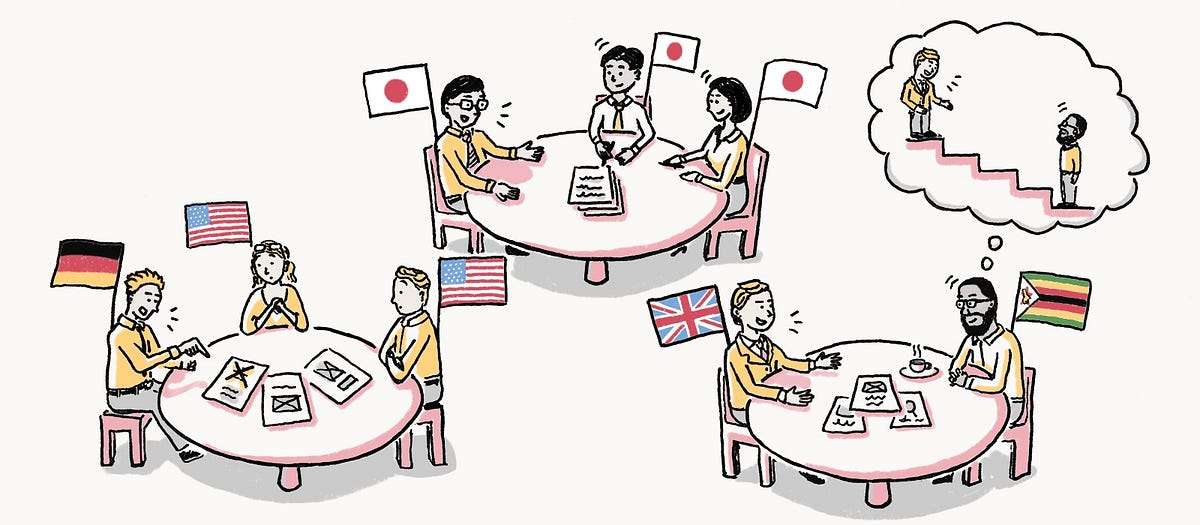

As AI becomes increasingly multilingual and global in its applications, these findings underscore the importance of understanding how language models adapt, or fail to adapt, across cultures. A system trained mainly on Western data may unintentionally carry Western assumptions into other regions, and a system trained mainly on data from elsewhere may do the same in reverse.

This has real-world implications for:

- International policy and legal translation

- Global education tools

- Cross-cultural customer service

- AI-generated content for media and marketing

Toward More Culturally Aware AI

Experts say this is not necessarily a flaw, but a design consideration. “Language models are cultural mirrors,” one researcher noted. “They reflect not just the words, but the worldviews embedded in language.”

To improve cultural neutrality and sensitivity, developers may need to:

- Include more balanced, diverse training data

- Use culture-specific fine-tuning

- Offer disclosure or controls for how content is generated in different languages

Final Thoughts

The study highlights a critical challenge for the future of AI: How can we build systems that are globally intelligent, yet culturally respectful? As LLMs are integrated into more decision-making and creative processes, the need for cross-cultural awareness will only grow.