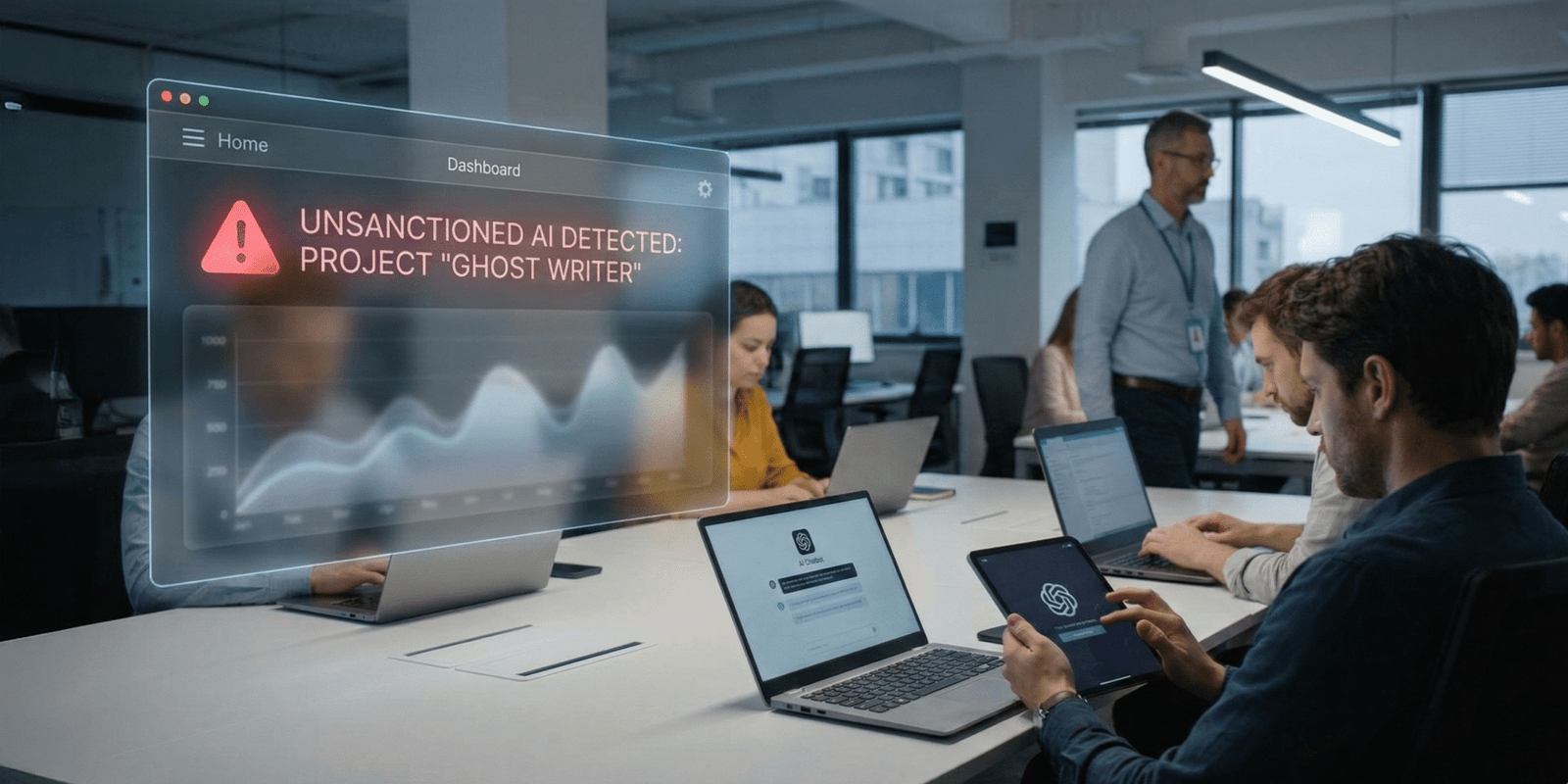

Shadow AI in workplaces is becoming one of the most urgent challenges for modern organizations. As companies adopt structured digital transformation strategies, employees often turn to unsanctioned AI tools to speed up tasks, simplify research, or automate small pieces of work. This creates a silent layer of technology operating outside IT visibility. At first glance, this might look harmless. However, as businesses scale, unmonitored AI usage opens the door to security risks, data exposure, and compliance gaps. Understanding this phenomenon is essential for leaders who want to protect their systems while still encouraging innovation.

The rise of hybrid work has accelerated this behavior. Employees work across devices, access cloud services independently, and experiment with new productivity tools without informing IT. When tight deadlines or complex tasks appear, many workers lean on AI tools they discover online because they feel faster or more flexible than approved systems. This growing pattern forms the core of Shadow AI in workplaces. And while it highlights a culture of initiative and adaptability, it also reveals vulnerabilities that organizations cannot ignore.

The Growing Reality Behind Shadow AI

The concept of “shadow technology” is not new. Teams have used unauthorized software for years. The difference today is speed. AI tools are now accessible with a single click, no installation required. Workers can generate reports, write code, or analyze data instantly, often without realizing they are exposing sensitive information. This is exactly why Shadow AI in workplaces is rising more quickly than IT teams can track.

Consider a simple example: a marketer uses an unapproved AI writing tool to prepare a campaign brief. Although the task seems small, the tool might store uploaded customer insights on external servers. Another example is a developer testing external code-generation tools without checking licensing rules. These patterns create invisible risks hidden inside everyday workflows. The challenge is not the intent; it is the lack of oversight.

Why Employees Resort to Unapproved AI Tools

To understand Shadow AI, leaders must understand motivation. Employees are not trying to break rules; they are trying to work efficiently. Several factors drive this trend:

- Speed and convenience. AI tools help users finish tasks quickly, especially when company-approved tools feel slow.

- Lack of clarity. Many organizations do not clearly communicate which AI tools are allowed, so employees choose their own.

- Experimentation culture. Curiosity drives workers to explore tools that promise better results.

- Insufficient training. Without proper guidance, employees do not fully understand the risks of using external AI platforms.

- Pressure to perform. High workloads push employees to seek shortcuts that help them meet deadlines.

When these motivators combine, Shadow AI becomes a natural outcome. Teams innovate faster but without guardrails to protect company data.

Risks Companies Face When Shadow AI Grows Unchecked

The dangers of Shadow AI in workplaces are often hidden until a problem occurs. Even small interactions with unapproved AI tools can create serious vulnerabilities. Some of the most common risks include:

- Data exposure. External AI tools may store prompt and output data in ways that violate company policies.

- Compliance violations. Industries like finance, healthcare, and consulting must follow strict data rules. A single prompt containing regulated data, sent to an unmonitored tool, can be enough to break them.

- Inaccurate outputs. AI tools vary in reliability. Using the wrong one can produce incorrect reports, flawed analyses, or buggy code.

- Integration conflicts. Mixing unauthorized tools with company workflows can break processes or create inconsistent data.

- Security gaps. Malicious tools disguised as AI services can install malware or capture login credentials.

These risks do not just affect one department. They can influence customer trust, regulatory standing, and even long-term business strategy.

The Productivity Side of Shadow AI

Despite the risks, Shadow AI also highlights an important truth: employees want smarter tools. The popularity of these tools shows that people are actively searching for ways to improve speed, clarity, and output quality. Instead of shutting down usage completely, companies can learn from these patterns. Shadow AI in workplaces signals areas where internal tools fall short. When used correctly, this insight can help IT teams introduce better systems that keep productivity high and risks under control.

A balanced approach encourages innovation without opening the door to compliance issues. Companies that embrace this mindset often build healthier technology cultures.

How Organizations Can Respond Without Killing Innovation

Leaders who want to manage Shadow AI effectively must avoid extremes. Over-restricting tools frustrates employees. Ignoring unauthorized usage puts the business at risk. The solution lies in a structured, thoughtful approach.

1. Build clear AI usage policies.

Teams should know exactly which tools are allowed, which are restricted, and why. Transparency empowers responsible decisions.

2. Provide approved AI alternatives.

When employees have access to reliable internal AI solutions, they are less likely to explore unsafe options.

3. Offer training and awareness programs.

Help teams understand how AI tools handle data, process inputs, and store information.

4. Monitor system activity carefully.

Analytics platforms can track unsanctioned tool interactions, making Shadow AI easier to identify without disrupting workflows.

5. Foster a culture of communication.

Encourage employees to suggest tools they find helpful. This reduces secrecy and makes evaluation easier.

By taking these steps, organizations can reduce risk without stifling productivity or creativity.
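To make the policy and monitoring steps more concrete, here is a minimal Python sketch, not a production implementation. It assumes a hypothetical proxy log in which each line is `user url`, and a hypothetical policy table that maps AI tool hostnames to a status. The domain names, the log format, and the function names are all illustrative assumptions; real analytics platforms expose this data in their own ways.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical policy table: these hostnames are placeholders, not real tools.
AI_TOOL_POLICY = {
    "approved-ai.example.com": "allowed",
    "writing-helper.example.net": "restricted",
}


def classify_host(host: str) -> str:
    """Return the policy status for a host; hosts not in the table are 'unreviewed'."""
    return AI_TOOL_POLICY.get(host.lower(), "unreviewed")


def summarize_requests(log_lines, watched_hosts):
    """Count requests per (user, host, status) for watched hosts that are not allowed.

    Assumes each log line has exactly two whitespace-separated fields:
    a user name and a full URL. Lines in any other shape are skipped.
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip lines that do not match the assumed format
        user, url = parts
        host = urlparse(url).hostname
        if host is None or host not in watched_hosts:
            continue  # not a URL, or not an AI tool we are watching
        status = classify_host(host)
        if status != "allowed":
            counts[(user, host, status)] += 1
    return counts


# Illustrative data only.
SAMPLE_LOG = [
    "alice https://approved-ai.example.com/chat",
    "bob https://writing-helper.example.net/generate",
    "bob https://writing-helper.example.net/generate",
    "carol https://new-codegen.example.org/complete",
    "malformed line with extra fields",
]
WATCHED_HOSTS = {
    "approved-ai.example.com",
    "writing-helper.example.net",
    "new-codegen.example.org",
}

report = summarize_requests(SAMPLE_LOG, WATCHED_HOSTS)
for (user, host, status), n in sorted(report.items()):
    print(f"{user}\t{host}\t{status}\t{n}")
```

A summary like this is meant to start a conversation rather than trigger a penalty: an "unreviewed" tool that keeps appearing is a natural candidate for evaluation, which ties the monitoring step back to the communication step.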

A Realistic Path Forward

The goal is not to eliminate Shadow AI entirely, because that is unrealistic. Instead, companies should aim to guide it. When handled correctly, Shadow AI becomes an opportunity to modernize systems, support smarter work patterns, and create a more innovative environment. The key is establishing the right balance between freedom and responsibility.

Conclusion

Shadow AI in workplaces reflects a natural shift in how teams use technology. Employees want faster tools, smoother workflows, and smarter ways to complete tasks. Instead of resisting this movement, companies can use it as a signal to improve internal solutions, develop clearer guidelines, and strengthen data governance. With balanced oversight, modern organizations can encourage creativity, protect information, and build technology environments that empower teams safely. As businesses continue to innovate, acknowledging and managing Shadow AI becomes essential for long-term stability, trust, and growth.