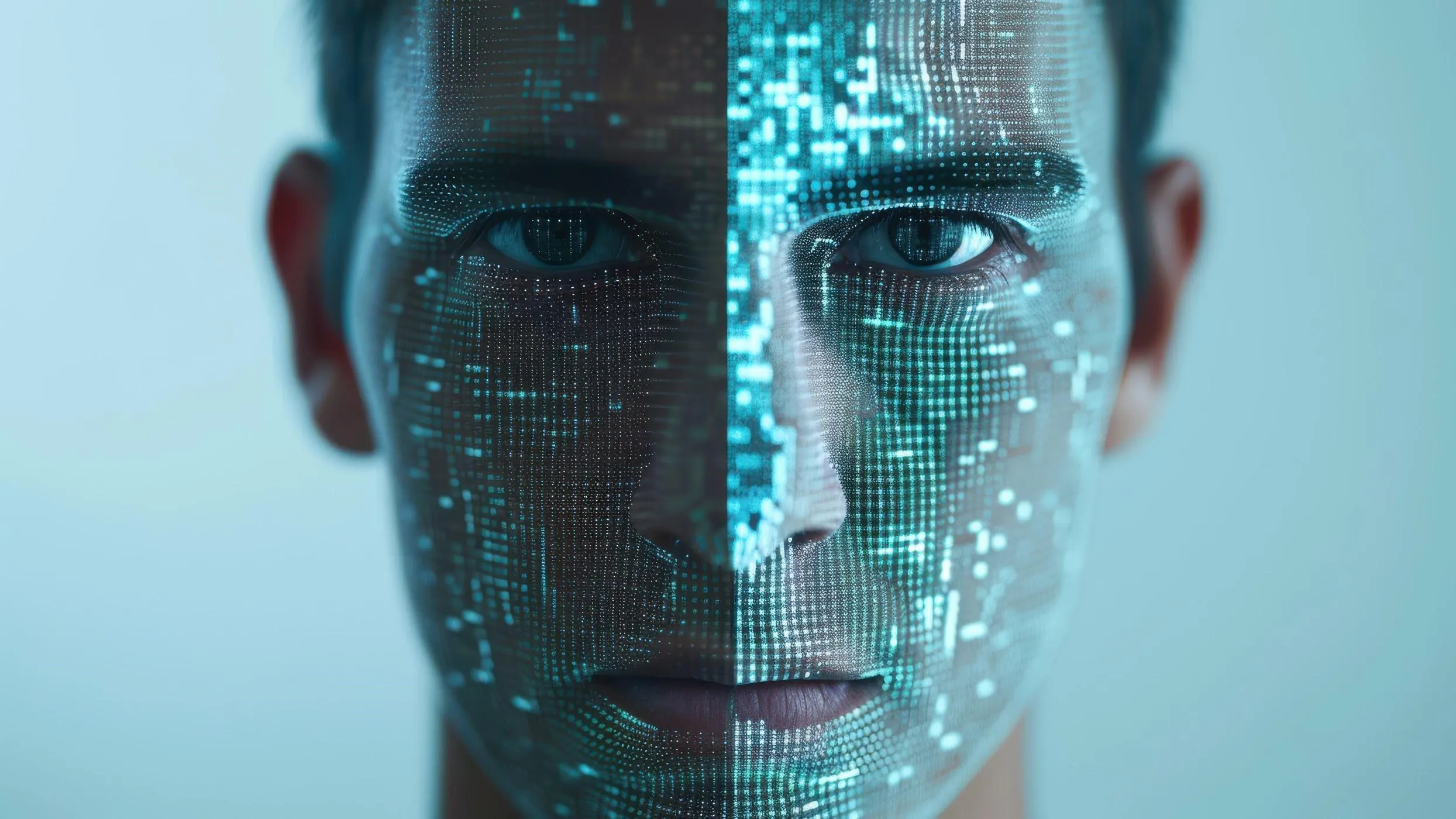

The rapid evolution of artificial intelligence has brought both breakthroughs and challenges—but perhaps none more alarming than the rise of deepfakes. These hyper-realistic, AI-generated impersonations of voices, faces, and actions are increasingly being used for malicious purposes, posing serious threats to governments, businesses, and public trust.

What Are Deepfakes?

Deepfakes use advanced machine learning techniques, particularly generative adversarial networks (GANs), to create convincing fake videos or audio recordings that mimic real individuals. From doctored political speeches to fraudulent video calls from CEOs, deepfakes have become sophisticated enough that the average viewer often struggles to tell fact from fiction.
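For readers curious about the mechanics, a GAN pits two models against each other: a generator G that produces fake samples from random noise z, and a discriminator D that tries to tell real samples x from generated ones. The standard textbook formulation of that contest is sketched below. The symbols G, D, x, and z are conventional notation rather than anything specific to a particular deepfake tool, and real systems often use modified objectives.

$$
\min_{G}\,\max_{D}\; V(D, G) \;=\; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] \;+\; \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator is rewarded for scoring real data high and generated data low, while the generator is rewarded for fooling it. As training proceeds, the generator's output becomes harder to distinguish from real footage, which is why the resulting fakes can be so convincing.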

Threats to National Security

Governments are particularly vulnerable to deepfake threats. Fake videos of politicians making inflammatory statements or appearing in compromising situations can sow political chaos, manipulate elections, or even trigger diplomatic crises. Intelligence agencies worldwide are ramping up efforts to detect and counteract deepfakes as part of national cybersecurity priorities.

Recently, a deepfake video impersonating a government official in Europe went viral and caused confusion during an election campaign, highlighting the real-world impact of such digital fabrications.

Corporate Risks and Fraud

The business world is also under siege. In multiple reported cases, fraudsters have used AI-generated voice deepfakes to impersonate CEOs or executives, tricking employees into transferring large sums of money or revealing sensitive information. In one widely reported 2019 incident, a UK-based energy firm lost about $243,000 (€220,000) after scammers used a deepfake voice to pose as the chief executive of its parent company.

Beyond financial loss, companies also face reputational damage if deepfakes are used to spread false information or manipulate stock prices.

Erosion of Trust and Information Integrity

Perhaps the most insidious effect of deepfakes is their ability to erode public trust. As deepfakes become more widespread, people may begin to question the authenticity of everything they see or hear. That doubt creates what experts call the “liar’s dividend”: anyone caught on a genuine recording can plausibly claim it is fake. When even real videos can be dismissed this way, truth itself becomes a casualty of the AI arms race.

Fighting Back: Tech and Policy Solutions

To combat deepfakes, tech companies are investing in detection tools powered by AI that can identify digital manipulations. Meanwhile, governments are drafting regulations and penalties for the malicious use of synthetic media. Public awareness and digital literacy are also key to reducing the impact.

Final Thought

Deepfakes represent one of the most dangerous and fast-moving challenges in the AI landscape. If left unchecked, they could undermine democracy, economic stability, and public trust. The world must act swiftly and collectively—combining technology, regulation, and education—to stay ahead of this digital deception.